RESEARCH

Arduino Animatronic Eye Mechanism

In this video, Will Cogley shows his 3D-printed creation: a set of eyes that move and close realistically using servos and an Arduino. Inspired by the eye mechanisms used in puppetry, he managed to create a set of remarkably lifelike eyes that can be controlled with a joystick. The result looks so realistic that it is easy to forget it is electronic and not real. This might not be a creature in itself, but these eyes could be incorporated into any robot head or creature. One might even argue that the eyes on their own could count as a creature.

JURASSIC WORLD: Building the Apatosaurus

Legacy Effects is a company that creates amazing movie props. In this video they show how they built the dying Apatosaurus from the movie Jurassic World. To my surprise, this dinosaur wasn’t computer-generated: it was an actual physical, moving prop (an animatronic). The team working on it also created the famous T-rex from the original Jurassic Park. A lot of work goes into creating a creature that looks and acts realistically on the big screen, but in my opinion it is totally worth it and one of the best applications of artificial creatures (even if they’re controlled).

AI Humanoid Robot Sophia by Hanson Robotics

I used to be intrigued by this artificially intelligent humanoid robot made by the Hong Kong-based company Hanson Robotics, since it is quite scary how similar it is to us humans. After being activated in 2016, it went on to speak at the United Nations and even became a citizen of Saudi Arabia in 2017. But as I rewatch these videos, I’m becoming really sceptical about whether this is a true AI robot or whether it is programmed or controlled by the company.

After looking further into my scepticism, I found out that I wasn’t the only one doubting that Sophia is run by a true AI. In this video by BitsBytesBobs (https://www.youtube.com/watch?v=CE4pGzmucig), Bob lays out well-reasoned arguments that Sophia is not a true AI. Both he and the head of Facebook’s AI department agree that this is simply a publicity stunt to draw more attention to AI and Hanson Robotics. I quote from this video: “She is just a side-show, not the real event, but a puppet of motors and latex, designed to give the impression of AI’s advancements ahead of the curve. Ahead of Google, ahead of Facebook and many others. It’s a facade, a promotion of lies with the intent to promote the company behind it. In essence it’s BS (bullshit) dressed up as scientific advancement.”

Knowing that experts in the field also think this is not true AI is enough for me to lose all faith in Sophia and what she stands for. In the end, it’s not about AI or technological advancement; once again, it’s all about money. In my opinion (and Bob’s), this hurts the way people perceive technological advancements in general, since it makes these companies hard to trust.

CNBC also made a video about this topic, listing some clear arguments (some of which Bob also used) and concluding that, instead of promoting AI advancements, Sophia is very misleading and might hurt the general public’s view of AI. If you are interested in more information, check out the video: https://www.youtube.com/watch?v=7fnCQC7bLs0

TINKERING

DEMO I

For my first demo, I wanted to see if I could use the data from computer vision in Processing to control one servo. The idea is that the servo would follow your face as you move it around.

I got it working the way I wanted by using the LiveCam example from the computer vision library in Processing, mapping the X value of a recognised face to the servo’s range (0-160) and sending that value to the Arduino over serial. It works, but only until the X value of the recognised face goes over 200; then the servo resets for some peculiar reason.
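The post doesn’t show the serial code, so here is a minimal, hypothetical sketch of the Arduino’s receiving side. It assumes Processing sends each angle as ASCII digits followed by a newline; the names AngleParser and clampAngle are made up for illustration. It is plain C++ (no Arduino.h) so it can be compiled and tested off the board; on the Arduino you would pass each byte from Serial.read() to feed() inside loop() and hand the result to servo.write(). It doesn’t explain the reset above 200, but framing each number with a newline and clamping to 0-160 could help rule out two possible causes: a multi-digit value being split across reads, and an out-of-range angle reaching the servo.

```cpp
// Hypothetical servo limits, matching the 0-160 range used in the demo.
constexpr long kServoMin = 0;
constexpr long kServoMax = 160;

// Clamp any parsed value into the servo's safe range.
int clampAngle(long v) {
    if (v < kServoMin) return static_cast<int>(kServoMin);
    if (v > kServoMax) return static_cast<int>(kServoMax);
    return static_cast<int>(v);
}

// Accumulates ASCII digits until '\n', then yields one clamped angle.
class AngleParser {
public:
    // Feed one received byte. Returns true when a complete angle is stored in `angle`.
    bool feed(char c, int &angle) {
        if (c >= '0' && c <= '9') {
            // Stop growing the number once it is far out of range, to avoid overflow.
            if (value_ < 100000) value_ = value_ * 10 + (c - '0');
            hasDigits_ = true;
            return false;
        }
        if (c == '\n') {
            bool ready = hasDigits_;  // an empty line produces no angle
            if (ready) angle = clampAngle(value_);
            value_ = 0;
            hasDigits_ = false;
            return ready;
        }
        // Ignore everything else, e.g. the '\r' of a CRLF line ending.
        return false;
    }

private:
    long value_ = 0;
    bool hasDigits_ = false;
};
```

Sending values as text lines costs a few extra bytes per update, but it lets the receiver tell where one number ends and the next begins, which raw single bytes cannot do on their own.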

As a bonus I tried to see if the software would recognise my cat’s face and it did, awesome!

DEMO II

For my second demo, I wanted to try to create a simple creature in Processing, staying with eye tracking (for some reason this theme really appeals to me).

I ended up with a face on the screen whose eyes follow your mouse around. When you click the screen, the face opens his mouth, and when you click again he closes it.
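The Processing code isn’t included, but a common way to make eyes follow the mouse is to point the pupil towards the cursor and cap how far it can move from the eye’s centre. Below is a minimal sketch of that geometry in C++ (Processing itself is Java-based; there you would pass mouseX and mouseY as the target and toggle the mouth in mousePressed()). pupilPosition, Face and maxOffset are hypothetical names, and maxOffset is assumed to be the eye radius minus the pupil radius, so it must not be negative.

```cpp
#include <cmath>

struct Point {
    double x;
    double y;
};

// Where to draw the pupil so it "looks at" the target while staying inside the eye.
// maxOffset: how far the pupil centre may move from the eye centre (>= 0).
Point pupilPosition(Point eyeCenter, Point target, double maxOffset) {
    double dx = target.x - eyeCenter.x;
    double dy = target.y - eyeCenter.y;
    double dist = std::hypot(dx, dy);
    if (dist <= maxOffset) {
        return target;  // target is within reach: the pupil sits right on it
    }
    // Shrink the eye-to-target vector so the pupil stops at the edge of the eye.
    double scale = maxOffset / dist;
    return {eyeCenter.x + dx * scale, eyeCenter.y + dy * scale};
}

// Hypothetical mouth state: every click flips between open and closed.
struct Face {
    bool mouthOpen = false;
    void onClick() { mouthOpen = !mouthOpen; }
};
```

Clamping the offset (rather than moving the pupil a fixed distance) means the eyes look directly at the cursor when it hovers over them and simply "look towards" it otherwise.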

DEMO III

For the last demo (sticking with eyes), I made a shy eye: an eye that looks away depending on how close your hand is to it. I did this by mapping the distance from the ultrasonic sensor (from 30 down to 1) onto the servo’s range (0-160). It is not that complicated, but very effective.
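A minimal sketch of the shy-eye mapping, assuming the sensor reports distance in the same units as the 30-to-1 range above. mapRange reproduces the integer formula of Arduino’s built-in map(), which accepts a reversed input range but does not clamp, so the reading is constrained to 1-30 first; without that step, a reading of 100 would produce a negative angle. On the board you could simply write map(constrain(distance, 1, 30), 30, 1, 0, 160); the helpers here exist only so the sketch compiles off-board, and shyEyeAngle is a hypothetical name.

```cpp
// Same integer linear interpolation as Arduino's map(); it does NOT clamp.
long mapRange(long x, long inMin, long inMax, long outMin, long outMax) {
    return (x - inMin) * (outMax - outMin) / (inMax - inMin) + outMin;
}

// Equivalent of Arduino's constrain(): keep x between lo and hi.
long constrainRange(long x, long lo, long hi) {
    return x < lo ? lo : (x > hi ? hi : x);
}

// Shy eye: a far hand (30) gives 0 degrees, a very close hand (1) gives 160.
long shyEyeAngle(long distance) {
    long d = constrainRange(distance, 1, 30);  // clamp first, since map() won't
    return mapRange(d, 30, 1, 0, 160);         // reversed input range flips direction
}
```

Because the formula uses integer division, intermediate distances are truncated (a reading of 15 gives 82 rather than 82.76), which is far finer than a hobby servo can resolve anyway.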

DESIGN AND BUILD

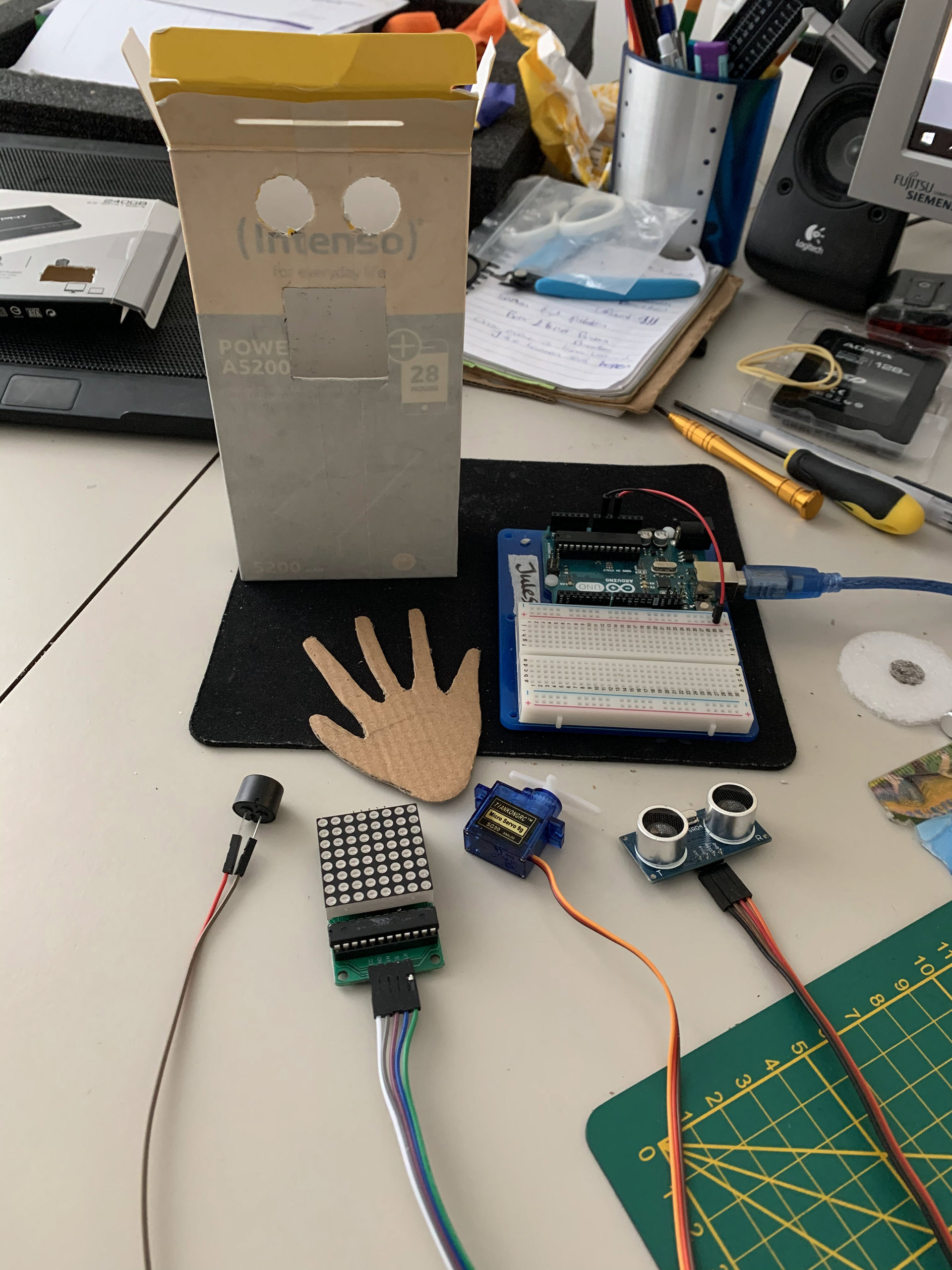

The idea for my creature is a desk pet: you can wave at him to make him happy, and he will wave back.

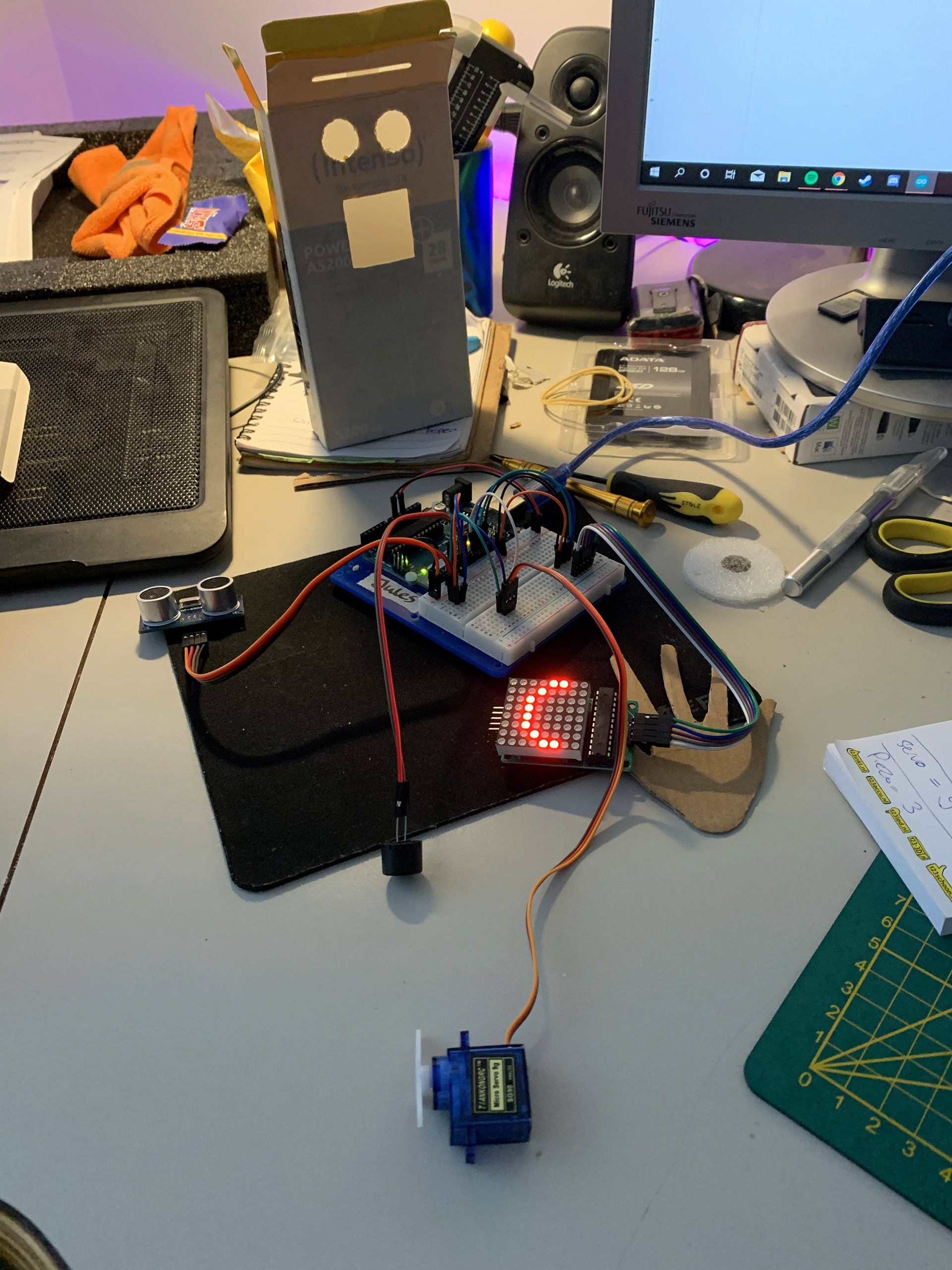

I want to do this using the ultrasonic sensor, LED matrix, servo and piezo speaker. I would count the number of times something comes close to the ultrasonic sensor when you wave at it (the distance goes from far, to close, to far again). When it has counted five hand passes (waves), it becomes happy, makes a sound and then waves back at you!

The way I would do this is with an if-statement that adds 1 to a variable every time the distance to the sensor comes within range after having been out of range, so a hand that stays close is counted only once. Using another if-statement, I can then check when this variable reaches 5, and then trigger the servo and piezo, update the matrix and reset the variable. The face stays happy for a little while before going back to being sad.
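Counting every loop in which the hand is merely "within range" would add 1 many times per wave, so a sketch like this counts only the moment the reading changes from far to close. It is a minimal, hypothetical C++ version of the logic described above, not the actual showcase code: WaveDetector, the 20-unit "close" threshold and the 3-second happy period are assumptions, and time is passed in as a millis()-style timestamp so it can be tested off the board. On the Arduino, update() returning true is where the servo wave, piezo sound and happy matrix face would go.

```cpp
// Hypothetical tuning values, not taken from the original project.
constexpr long kCloseDistance = 20;       // readings at or below this count as "close"
constexpr int kWavesNeeded = 5;           // waves before the pet gets happy
constexpr unsigned long kHappyMs = 3000;  // how long the happy face stays up

class WaveDetector {
public:
    // Call once per loop() with the latest distance and a millis()-style time.
    // Returns true only on the call where the fifth wave is detected.
    bool update(long distance, unsigned long nowMs) {
        // Assumption: a reading of 0 means "no echo" and is treated as far.
        bool close = distance > 0 && distance <= kCloseDistance;
        bool triggered = false;

        // Count only the far -> close transition, so a lingering hand counts once.
        if (close && !wasClose_) {
            ++waves_;
            if (waves_ >= kWavesNeeded) {
                waves_ = 0;  // reset the counter, as in the design above
                happy_ = true;
                happySince_ = nowMs;
                triggered = true;
            }
        }
        wasClose_ = close;

        // Unsigned subtraction stays correct when millis() rolls over.
        if (happy_ && nowMs - happySince_ >= kHappyMs) {
            happy_ = false;  // time to show the sad face again
        }
        return triggered;
    }

    bool isHappy() const { return happy_; }
    int waves() const { return waves_; }

private:
    bool wasClose_ = false;
    bool happy_ = false;
    int waves_ = 0;
    unsigned long happySince_ = 0;
};
```

Using a timestamp instead of delay() keeps loop() running while the pet is happy, so the sensor keeps being read and the matrix can switch back to the sad face on time.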

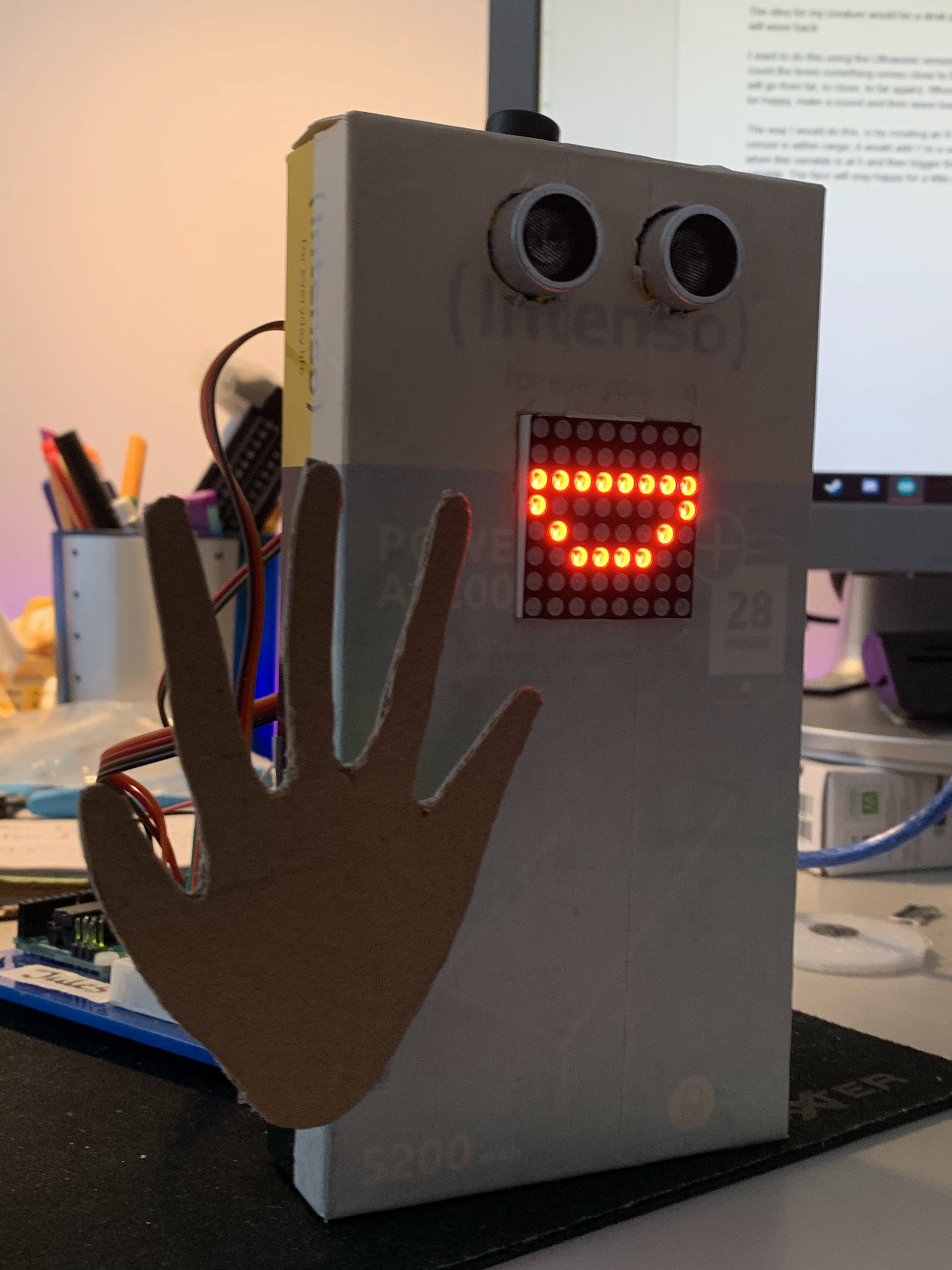

People might notice that this little guy’s hand is the wrong way around, but since he is not a human being, his hands are just like this… His species is characterised by having its thumb on the wrong side!

For the last challenge I included a schematic of my circuit made with Fritzing. This time I left it out, because in my opinion it wasn’t really necessary: all components were wired directly to the Arduino, so there was no real circuit on the breadboard.

SHOWCASE + CODE

REFLECTION

MY VISION ON ARTIFICIAL CREATURES

In my opinion, AI is the future: a future we have to be aware of and careful with. The possibilities of AI are endless, but they can also be dangerous. In this case, though, we're not only talking about AI, but about artificial creatures specifically. The concept of an artificial creature is still somewhat vague, since we can't clearly say what is and what isn't a creature. Is Alexa or Google Home a creature? Is your phone a creature? Or are robots the only artificial creatures? These questions need answering first, but for the sake of this challenge I chose to define a creature as something robot-like: if we can recognise a piece of technology as a creature, it is a creature.

MY FINAL PRODUCT

This is why I built something with human features that we recognise and are familiar with, like eyes, a mouth (smiling or sad) and a hand. I think my creation really reads as a creature: if you asked anyone what they see when looking at it, they would say something along the lines of 'a creature'. I'm also happy that I managed to give this little guy some emotions that we as humans can relate to.

MY EXPERIENCE WITH ARDUINO

In my opinion, Arduino is great for creating a small moving, interactive creature. I managed to build what I had in mind and even extended my coding skills in this challenge: I had never before written my own separate void function to group actions and call it later. I'm also proud of the way I recognise waving with the ultrasonic sensor; the way it counts the waves works great in my opinion. I would definitely use this technology again in the future, but mainly for prototyping or making gadgets for at home. I don't see much professional use for Arduino, since in most cases it would make more sense to use a Raspberry Pi, which is more capable and can run more elaborate programming languages.